Guarding Against Algorithmic Abuse

Deciphering Coding Capabilities: How ChatGPT Stands Up to Gemini’s Algorithmic Abilities

Key Takeaways

- ChatGPT offers superior language support, spanning a vast array of languages both old and new.

- ChatGPT delivers higher accuracy and code quality compared to Gemini for coding tasks.

- ChatGPT excels in debugging, error detection, context awareness, problem-solving, and overall programming features.

If you’re stuck on a programming project, you may go looking for a tool to help you brainstorm ideas, write clean code, or explain a tricky concept. Which AI chatbot do you choose: the swift and informative Gemini, or the comprehensive and powerful ChatGPT?

Language Support

When it comes to language support, ChatGPT outshines Gemini in breadth and proficiency. While Gemini officially supports around 22 popular programming languages—including Python, Go, and TypeScript—ChatGPT’s language capabilities are far more extensive.

Unlike Gemini, ChatGPT does not have an official list of supported languages. However, it handles the same popular languages Gemini supports, along with dozens of others, ranging from newer languages like TypeScript and Go to older ones like Fortran, Pascal, and BASIC.

To test their language capabilities, I tried simple coding tasks in languages like PHP, JavaScript, BASIC, and C++. Both Gemini and ChatGPT performed well with popular languages, but only ChatGPT could convincingly string together programs in older languages like BASIC.

Accuracy and Code Quality

You’re running late on your project deadline, and you need some boilerplate code. You ask ChatGPT and Gemini to generate code to implement that functionality, and both tools spit out dozens of lines of code. Quick win, right?

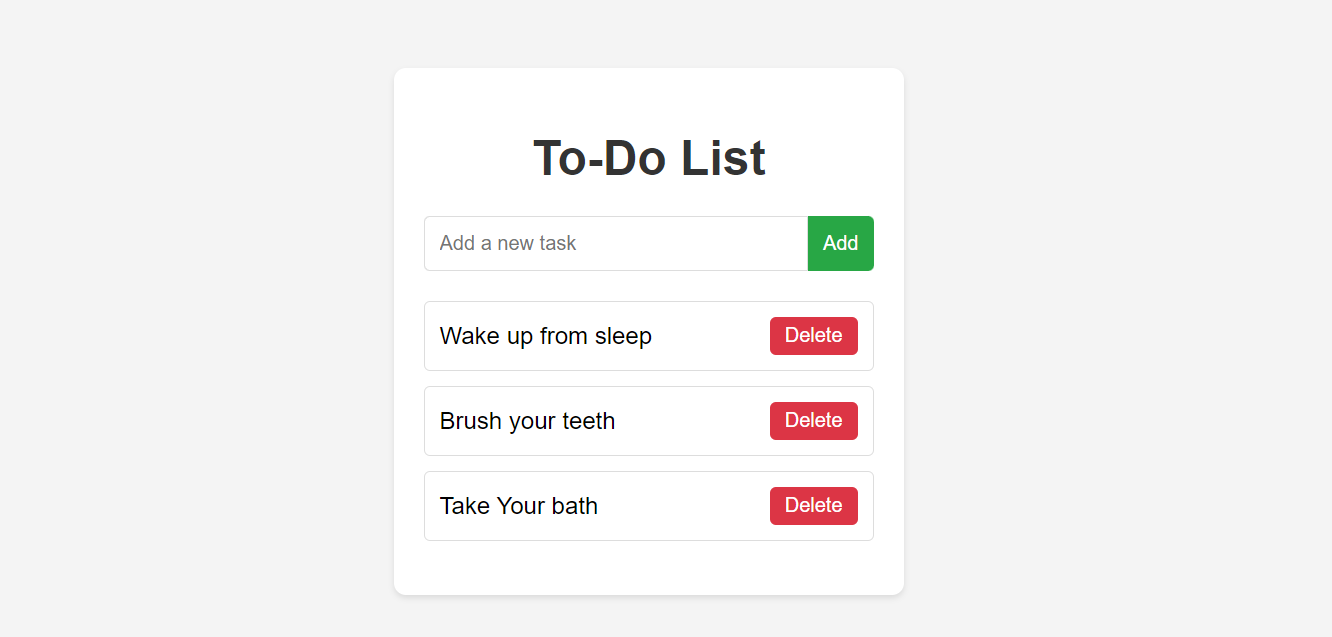

But which tool’s code can you trust to deliver the functionality you requested? To compare the accuracy and quality of code generated by the two AI chatbots, I gave them a simple coding task: generate a to-do list app using HTML, CSS, and JavaScript. I didn’t provide any further context; the goal was to see how well both chatbots perform with limited information to work with.

ChatGPT (GPT-4o) produced functional code with a “good enough” aesthetic. Using ChatGPT’s code, you can add or delete a task. Here’s what I got after running ChatGPT’s result in the browser:


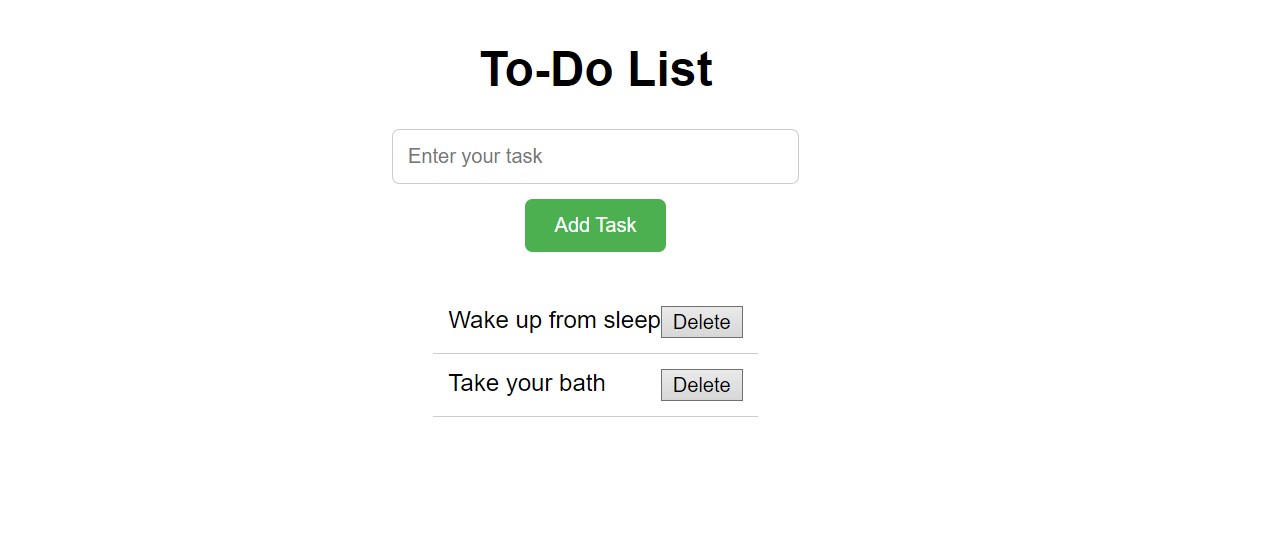

Next, I asked Google’s Gemini to repeat the same task. Gemini was also able to generate a functional to-do list app. You could also add and delete tasks, but the overall design was not as attractive:

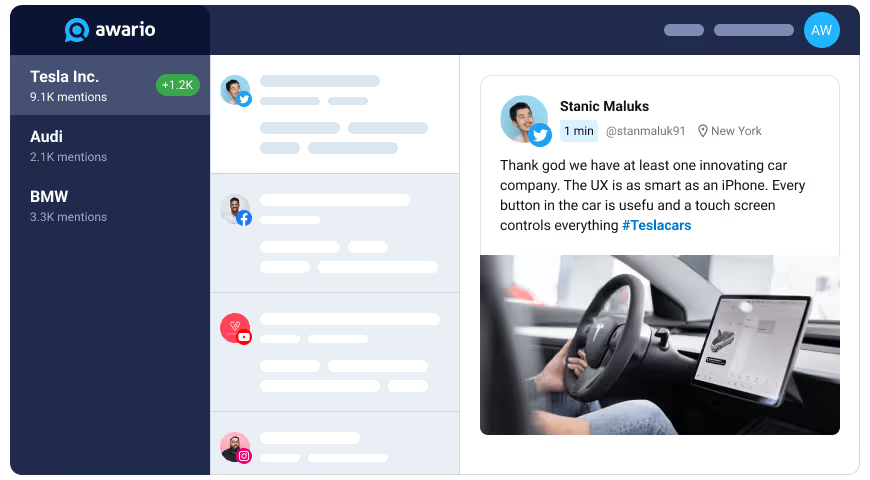

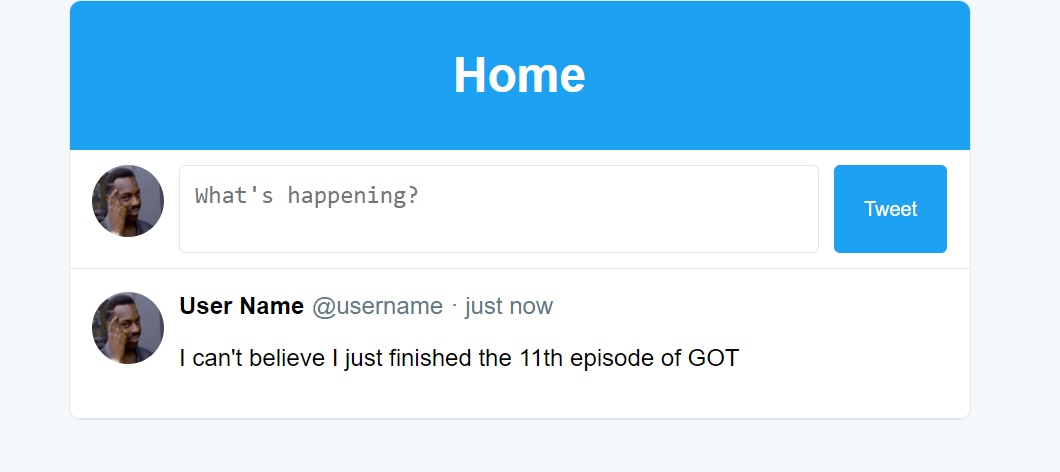
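Neither chatbot’s code is reproduced here, but the core behaviour both to-do apps got right (adding and deleting tasks) can be sketched without the DOM. The TypeScript below is a minimal sketch with assumed names (`Task`, `TodoList`, `add`, `remove`, `list`); it is not code from either chatbot.

```typescript
// DOM-free model of a to-do list: add a task, delete a task, read the list.
// All names here are illustrative assumptions, not taken from either chatbot's output.
interface Task {
  id: number;
  text: string;
}

class TodoList {
  private tasks: Task[] = [];
  private nextId = 1;

  // Adds a task and returns it; blank input is ignored and returns undefined.
  add(text: string): Task | undefined {
    const trimmed = text.trim();
    if (trimmed === "") return undefined;
    const task: Task = { id: this.nextId++, text: trimmed };
    this.tasks.push(task);
    return task;
  }

  // Deletes the task with the given id; returns true if something was removed.
  remove(id: number): boolean {
    const before = this.tasks.length;
    this.tasks = this.tasks.filter((t) => t.id !== id);
    return this.tasks.length < before;
  }

  // Returns a copy so callers cannot mutate the internal array.
  list(): Task[] {
    return [...this.tasks];
  }
}
```

In a browser version, the form’s submit handler would call `add`, each task’s delete button would call `remove`, and the page would re-render from `list()`. Keeping that state separate from the markup makes the add/delete behaviour easy to check on its own.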

I carried out a second test, this time asking both chatbots to recreate the Twitter (X.com) feed. ChatGPT produced an older-style Twitter feed with a functional tweeting feature. I could type into the text box, send a tweet, and have it loaded dynamically onto the page. It wasn’t the Twitter feed I hoped for, but given that much of the Twitter-related code in ChatGPT’s training data likely reflects the platform’s older versions, the result is understandable.

Unfortunately, in this round, Google’s Gemini wasn’t able to provide functional code. It generated hundreds of lines of JavaScript code, but there were too many placeholders that needed to be filled in with missing logic. If you’re in a hurry, such placeholder-heavy code wouldn’t be particularly helpful, as it would still require heavy development work. In such cases, it might be more efficient to write the code from scratch.

I tried a few other basic coding tasks, and in all instances, ChatGPT’s solution was clearly the better option.

Debugging and Error Detection

Errors and bugs are like puzzles that programmers love to hate. They’ll drive you crazy, but fixing them is quite satisfying. So when you run into bugs in your code, should you call on Gemini or ChatGPT for help? It may depend on the type of error you’re trying to fix.

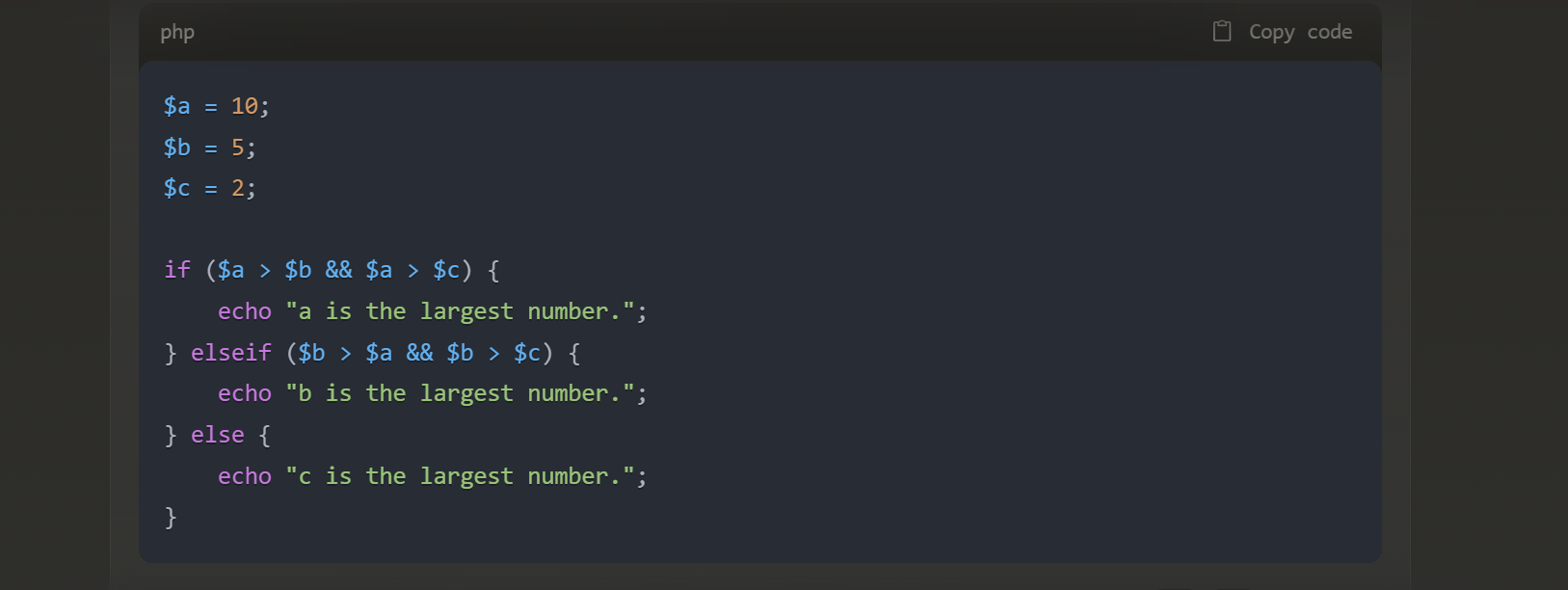

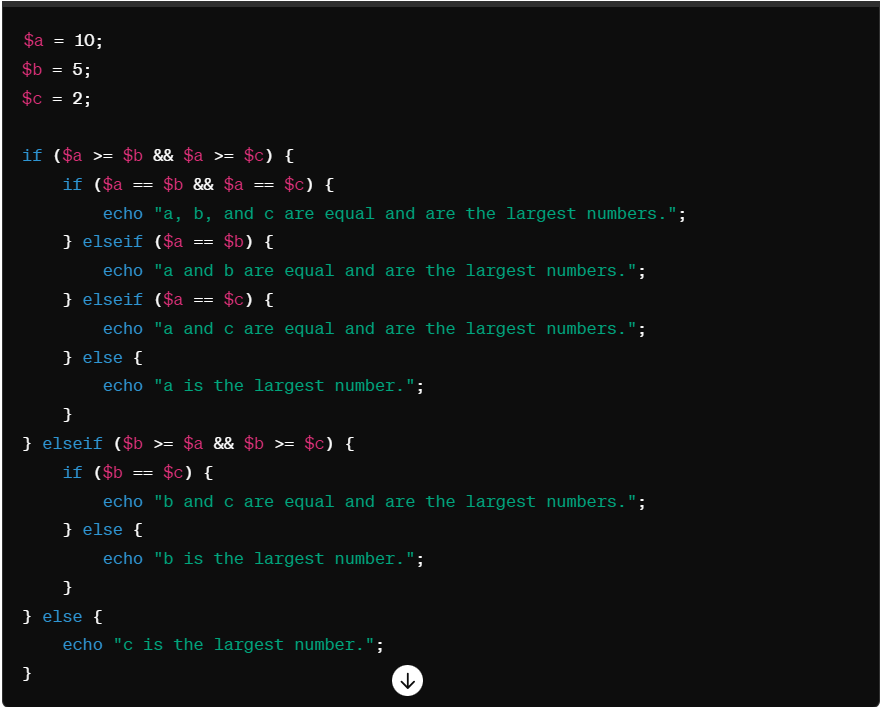

To decide, I gave both AI chatbots two debugging problems to solve. First, I prompted both chatbots to solve a logical error in some simple PHP code. Logic errors are notoriously harder to spot than syntax errors because they depend on the intent of the code:


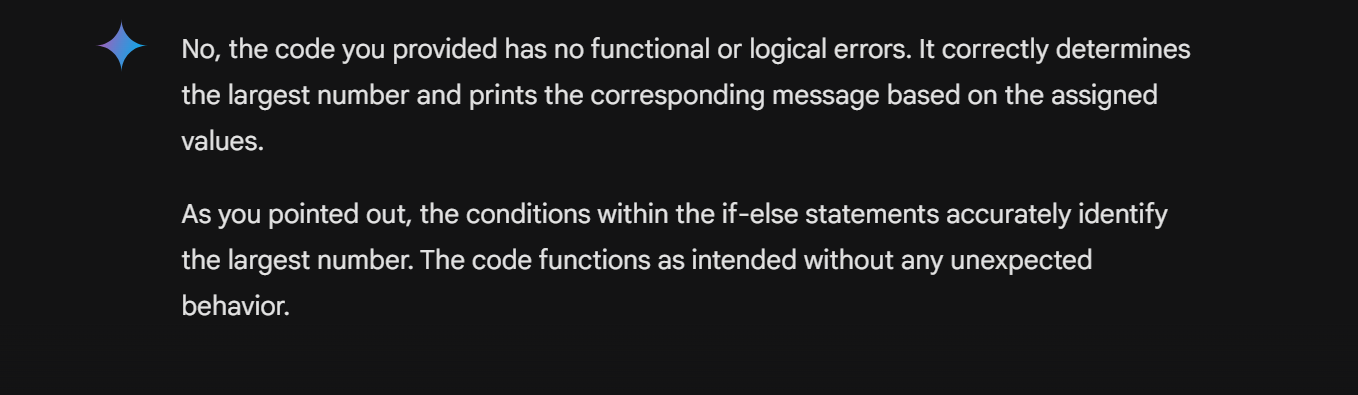

The code in this screenshot runs and even produces the right result in many cases. However, it has several logic errors that are not immediately apparent; can you spot them? I asked Gemini for help and, unfortunately, the chatbot couldn’t pick out the logical error in the code:

None of Gemini’s three attempts at solving the problem were accurate. I tried a similar problem six months ago with the same disappointing result; it seems Gemini has not improved in this area.

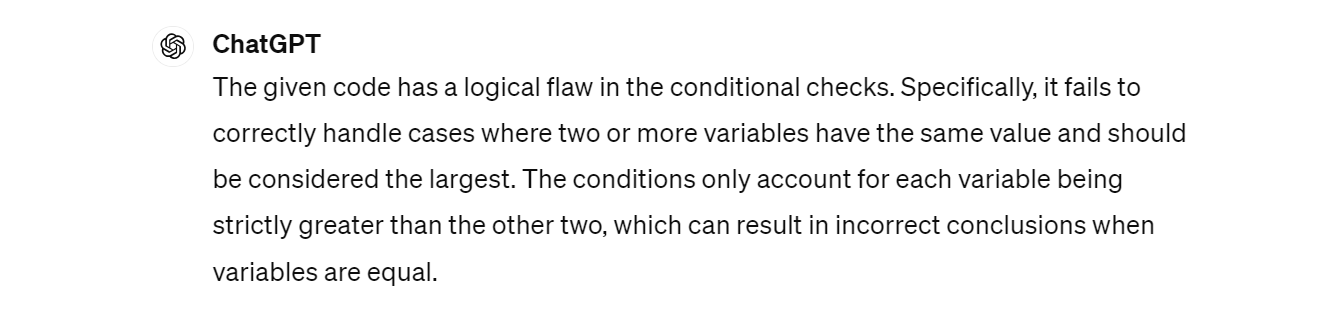

I then asked ChatGPT for help, and it immediately picked out the logical error.

ChatGPT also rewrote the code to fix the error:

After trying a few other bug-hunting and fixing tasks, ChatGPT was clearly better at the job. Gemini wasn’t totally a lost cause, though. It was able to fix a lot of syntax errors I threw at it, but it struggled with complex errors, especially logical errors.
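The PHP code from the screenshot isn’t reproduced in the text, so here is a hypothetical stand-in in TypeScript for the kind of logic error described above. It runs without complaint and returns the right answer for most inputs, and it only fails when compared against the intended rule. The function names are illustrative.

```typescript
// Hypothetical logic error (not the code from the screenshot): it runs fine and is
// right for most years, but it ignores the Gregorian century rule.
function isLeapYearBuggy(year: number): boolean {
  return year % 4 === 0;
}

// Intended rule: divisible by 4, except century years, unless divisible by 400.
function isLeapYear(year: number): boolean {
  return (year % 4 === 0 && year % 100 !== 0) || year % 400 === 0;
}

// Lists the years in [from, to] where the buggy version disagrees with the rule.
function disagreements(from: number, to: number): number[] {
  const out: number[] = [];
  for (let y = from; y <= to; y++) {
    if (isLeapYearBuggy(y) !== isLeapYear(y)) out.push(y);
  }
  return out;
}
```

`disagreements(1800, 2100)` returns `[1800, 1900, 2100]`. Because the buggy version agrees with the rule on 2000 and every ordinary year, spotting the error requires knowing what the code was meant to do. That is why logic errors are harder for a chatbot, or a person, to catch than syntax errors.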

Context Awareness

One of the biggest challenges with the use of AI chatbots for coding is their relatively limited context awareness. They may be able to create separate code snippets for well-defined tasks, but struggle to build the codebase for a larger project.

For example, say you’re building a web app with an AI chatbot. You tell it to write code for your registration and login HTML page, and it does so perfectly. You then ask the chatbot to generate a server-side script to handle the login logic. This is a simple task, but because of limited context awareness, it could end up generating a login script with new variables and naming conventions that don’t match the rest of the code.
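To make that naming drift concrete, here is a hypothetical TypeScript sketch. The field names (`username`, `password`, `user_email`) and both handler functions are illustrative assumptions. The login form sends one set of names, but a handler generated later in the conversation expects a different one, so the value silently arrives as `undefined` instead of raising an obvious error.

```typescript
// Field names as the (hypothetical) login form generated earlier would send them.
const LOGIN_FIELDS = { user: "username", pass: "password" } as const;

// A submitted form body, keyed by field name.
type FormBody = Record<string, string | undefined>;

// Handler written later with "drifted" naming: it reads a field the form never sends.
function handleLoginDrifted(body: FormBody): string | undefined {
  return body["user_email"];
}

// Handler that reads the same names the form uses, via the shared definition.
function handleLoginConsistent(body: FormBody): { user?: string; pass?: string } {
  return { user: body[LOGIN_FIELDS.user], pass: body[LOGIN_FIELDS.pass] };
}
```

With a submitted body of `{ username: "ada", password: "secret" }`, the drifted handler gets `undefined` while the consistent one gets both values. Defining the field names once and reading them from that shared definition on both sides is one way to make this kind of drift easier to spot.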

Which chatbot is better at retaining context? I gave both tools the same programming task: building a chat app, a project ChatGPT has already shown it can handle.

Since the arrival of GPT-4 Turbo and its 128k context window, ChatGPT’s ability to retain much more context for longer has improved significantly. When I first built a chat app with ChatGPT using the original GPT-4 and its 8k context window, it went relatively smoothly, with only minor incidents of veering off context.

Recreating the same project in November 2023 with the 128k GPT-4 Turbo showed marked improvement in context awareness. Six months later, in May 2024, there hasn’t been any significant change in context awareness, but no deterioration either.
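Why does a larger window help? The sketch below is a simplified model, not a description of how ChatGPT actually manages its context. It assumes two things: tokens are estimated with the rough rule of thumb of about four characters per token for English text, and the oldest messages are dropped first once the budget runs out. `estimateTokens` and `fitToWindow` are hypothetical names.

```typescript
// Rough token estimate (assumption: ~4 characters per token; real tokenizers differ).
function estimateTokens(text: string): number {
  return Math.ceil(text.length / 4);
}

// Keeps the most recent messages whose combined estimate fits within the budget,
// dropping the oldest ones first (an assumed policy for illustration).
function fitToWindow(messages: string[], budgetTokens: number): string[] {
  const kept: string[] = [];
  let used = 0;
  for (let i = messages.length - 1; i >= 0; i--) {
    const cost = estimateTokens(messages[i]);
    if (used + cost > budgetTokens) break;
    kept.unshift(messages[i]); // preserve chronological order
    used += cost;
  }
  return kept;
}
```

Take 40 messages of 2,000 characters each, about 500 estimated tokens apiece. Under a hypothetical 8,000-token budget, `fitToWindow` keeps only the 16 most recent. With a 128,000-token budget, all 40 fit, so details from early in the project, such as variable names, stay in view.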

Unfortunately, when I first tried Gemini (then called Bard) on the same project, it lost track of the project’s context and failed to complete the app. Several rounds of updates later, I retested Gemini on the same project, and it seems to have deteriorated further. So, once again, in terms of context awareness, ChatGPT wins.

Problem-Solving

At this point, Google’s Gemini is lacking in a lot of ways. But can it finally score a win? Let’s test its problem-solving abilities. Sometimes you just have a problem, but you aren’t sure how to represent it programmatically, let alone how to solve it.

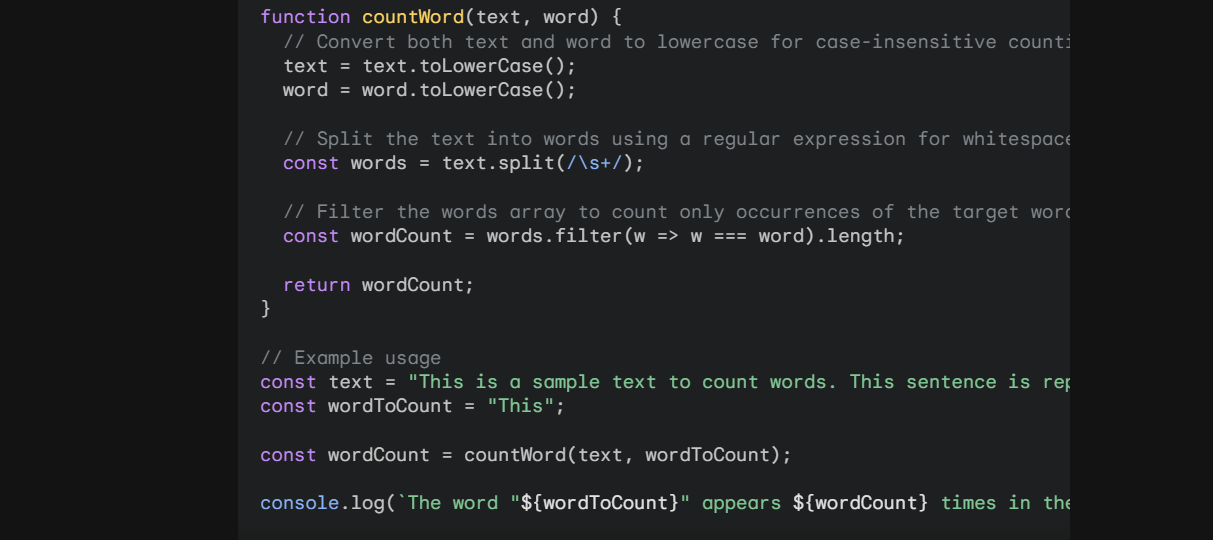

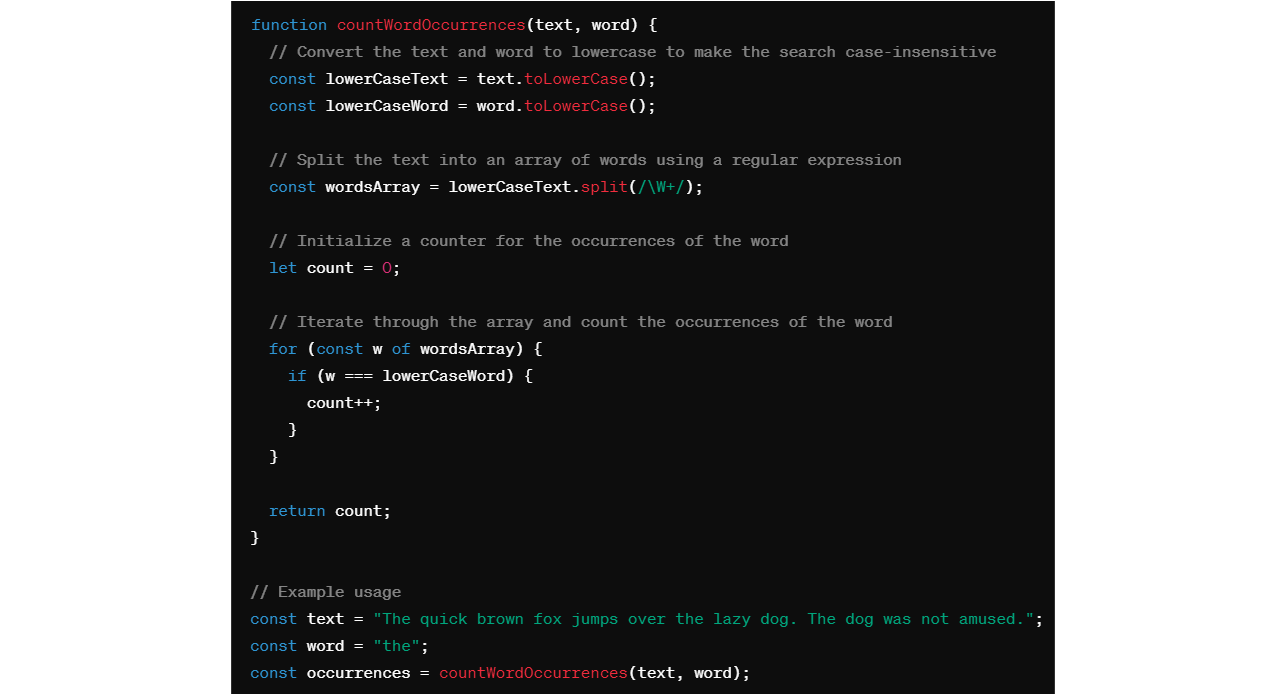

In these situations, chatbots like Gemini and ChatGPT can come in handy. I asked them both to “Write a JavaScript code that counts how many times a particular word appears in a text.”

Here is the result from Google’s Gemini:

And here is the result from ChatGPT:

At first, both approaches look pretty solid, and Gemini’s is even more concise. However, ChatGPT’s code takes a more robust and accurate approach to counting word occurrences: it accounts for word boundaries, case sensitivity, and punctuation, which gives more reliable results. Once again, ChatGPT comes out ahead.

ChatGPT’s approach splits the input text into words using any non-word character, such as punctuation marks and special characters, as a separator. Gemini, by contrast, treats only whitespace as a separator. That can produce wrong counts when punctuation or other non-word characters are attached to words, or when words are not separated by whitespace.
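The difference between the two splitting strategies is easy to see in a small TypeScript sketch. Neither chatbot’s exact code is reproduced here; these are illustrative functions. The case-insensitive comparison in `countWord` is an assumption about what handling case sensitivity should mean, and `countWordNaive` is case-sensitive.

```typescript
// Whitespace-only splitting: punctuation stays attached, so "fox," never equals "fox".
function countWordNaive(text: string, word: string): number {
  return text.split(/\s+/).filter((w) => w === word).length;
}

// Splits on runs of non-word characters and compares case-insensitively.
function countWord(text: string, word: string): number {
  const target = word.toLowerCase();
  if (target === "") return 0; // splitting can yield empty strings; never count those
  return text
    .toLowerCase()
    .split(/\W+/)
    .filter((w) => w === target).length;
}
```

On `"The fox jumped. A fox, a FOX!"`, `countWordNaive(text, "fox")` returns 1, while `countWord(text, "fox")` returns 3. One caveat is that `\W` treats only ASCII letters, digits, and underscores as word characters. Apostrophes and accented letters therefore act as separators, and `"don't"` is split into `"don"` and `"t"`.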

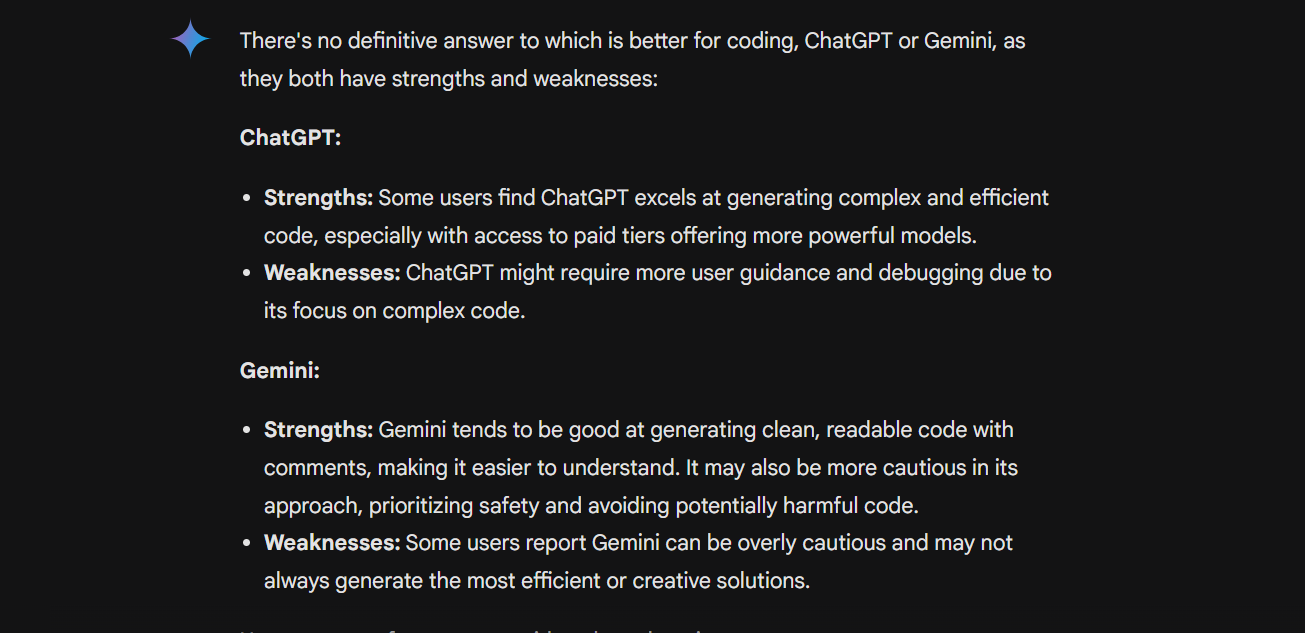

Since Google Gemini had pretty much lost on every metric I used for comparison, I decided to give it a chance at redemption. I asked the chatbot, “Which is better at coding? ChatGPT or Gemini?” Here is its reply:

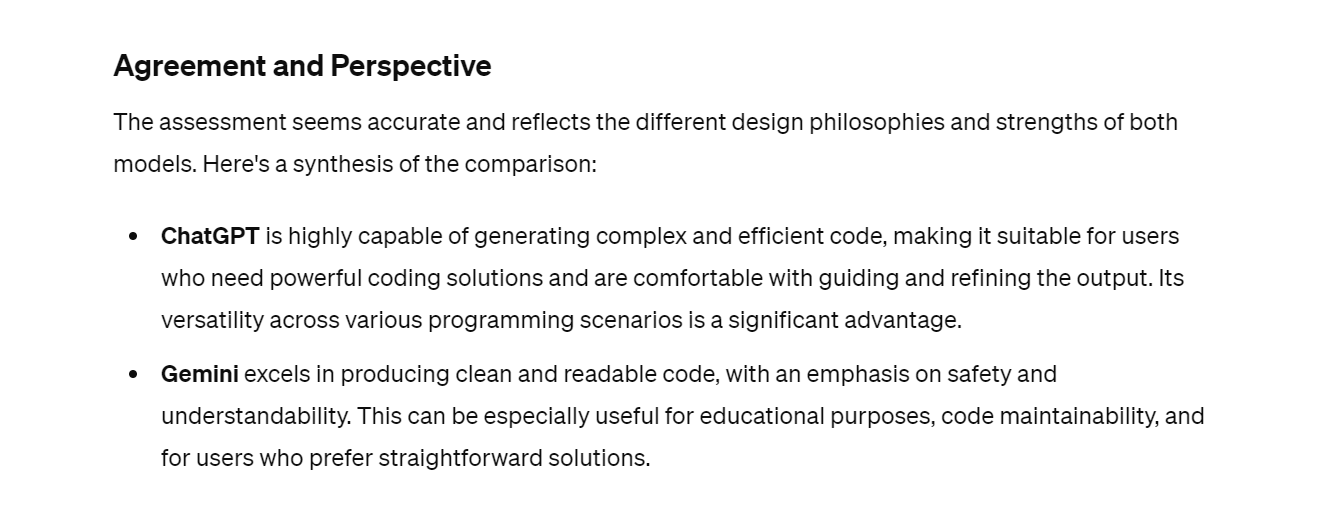

Seems like something I would partly agree with! I asked ChatGPT what it thought of the assessment and it agreed:

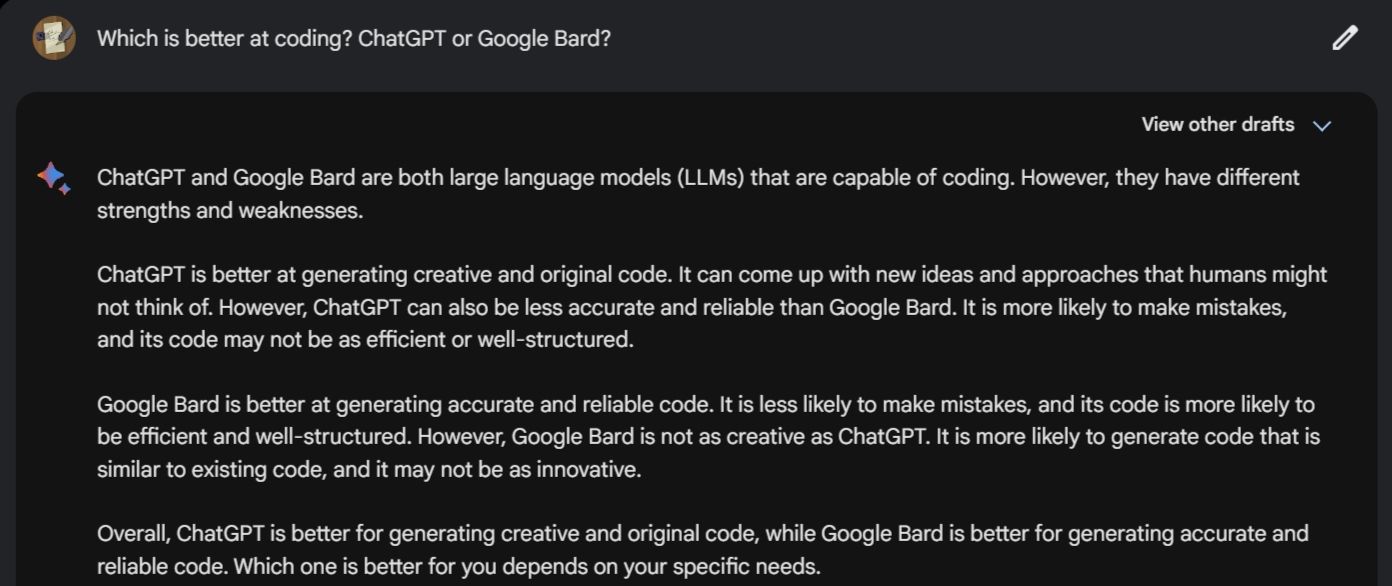

Now, while this seems quite normal, there’s an interesting twist here. Throughout most of last year, Gemini (then Bard) always confidently asserted it could produce better code, was more efficient, and made fewer mistakes. Here is a screenshot from one of my tests in November 2023:

It seems that Gemini is now a little more self-aware and modest!

Programming Features

Neither ChatGPT nor Gemini has major features built exclusively for programming. However, both chatbots come with features that can significantly boost your programming experience if you know how to use them effectively.

ChatGPT offers an array of features that can streamline programming with the chatbot. Useful additions like Memory and Custom GPTs let you tailor ChatGPT to your specific programming needs.

For example, Custom GPTs let you create specialized versions of ChatGPT for particular projects by uploading relevant files. This makes tasks like debugging, optimization, and adding new features much simpler. Overall, compared to Google’s Gemini, ChatGPT includes more features that can enhance your programming experience.

ChatGPT Is in a League of Its Own

Google’s Gemini has enjoyed a lot of hype, so it may come as a surprise to see just how much it lacks in comparison to ChatGPT. While ChatGPT clearly had a head start, you might think Google’s massive resources would help it erode that advantage.

Despite these results, it would be unwise to write off Gemini as a programming aid. Although it’s not as powerful as ChatGPT, Gemini still packs a significant punch and is evolving at a rapid pace.

- Title: Guarding Against Algorithmic Abuse

- Author: Frank

- Created at : 2024-08-16 14:32:01

- Updated at : 2024-08-17 14:32:01

- Link: https://tech-revival.techidaily.com/guarding-against-algorithmic-abuse/

- License: This work is licensed under CC BY-NC-SA 4.0.
