Alleged Security Flaw: ChatGPT Accused of Exposing User Passwords During Dialogue

Generative AI can be incredibly useful in some circumstances, but there have been a lot of problems with it as well. Inaccurate information, the occasional advertisement, and some creepy incidents have shown us that we’re still far off from the age of revolutionary AI. Now, another issue with ChatGPT has cropped up.

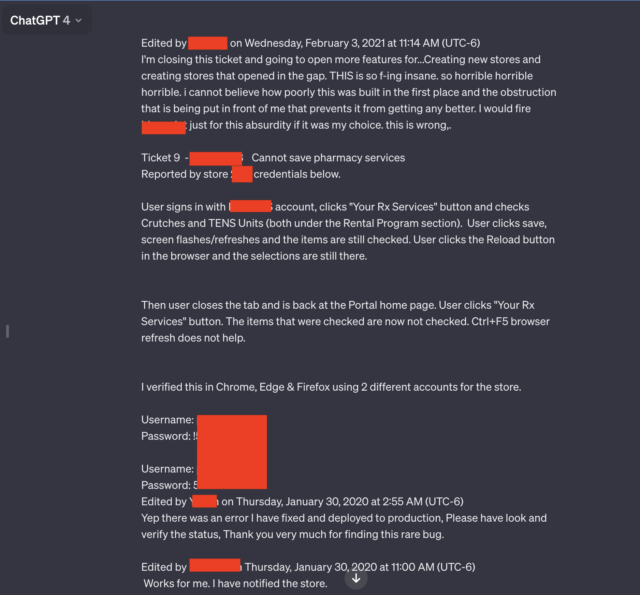

ChatGPT is allegedly leaking private conversations belonging to other users, some of which include private credentials such as usernames and passwords. The screenshots that surfaced show a copy of a private conversation containing two pairs of credentials for a support system used by employees of a pharmacy prescription drug portal. In the conversation, an employee uses the AI chatbot to troubleshoot problems with the portal and expresses frustration at its poor construction. While ChatGPT does keep a record of what you tell it unless you opt out, it seems weird that you’d be able to get it to actually hand over those private communications, and it makes us worried about how ChatGPT might be storing and managing training data.

OpenAI / Ars Technica

The leaked conversation goes beyond those screenshots and includes details such as the name of the app, the store number, and a link that exposes additional credential pairs. All of this apparently happened when ChatGPT returned a weird, completely unrelated answer to a user’s query. Other conversations leaked in similar incidents span various topics, including details of an unpublished research proposal, a presentation, and a PHP script. The odds of your own data being exposed this way are really small, but it shouldn’t go unmentioned that you shouldn’t be handing over your personal data to a chatbot in the first place. If you did, you should probably change your login details. You never know who will end up with your passwords.

OpenAI told Ars Technica that it is looking into this incident. In the past, OpenAI took ChatGPT offline because of a bug that exposed chat history titles from one user to unrelated users, so the company at least has a history of trying to fix such issues (perhaps unsuccessfully).

Source: Ars Technica

- Author: Frank

- Created at: 2024-08-29 01:38:26

- Updated at: 2024-08-30 01:38:26

- Link: https://tech-revival.techidaily.com/alleged-security-flaw-chatgpt-accused-of-exposing-user-passwords-during-dialogue/

- License: This work is licensed under CC BY-NC-SA 4.0.